The latest about chatbots, why they would be biased one way or another, and how there is now a conservative version of them; and recalling Conservapedia.

Our story so far: The long-promised dreams of artificial intelligence (AI) have become partially realized over the past six months through the release of various “chatbots,” online tools that respond to questions in natural-language English paragraphs. They do so remarkably quickly and in impressive detail — despite being egregiously wrong a certain percentage of the time. (Some of my Facebook friends have asked ChatGPT to write biographies of themselves, and have reported some hilarious details.) The results have been compared to B minus 8th grade papers. The spin of the chatbot authors has been to recommend using the results as starting points for human creativity and fact-checking.

Their problems are some of the same as those of social media: you can’t count on them being correct. The chatbots work by absorbing billions of data points on the internet and analyzing them for patterns, identifying which data points tend to go with which others. They aren’t “intelligent” (how did we ever imagine they could become “intelligent” without having some input from the real world? It turns out their input is the internet!); they reflect all the wisdom and the nonsense out there on the web, in much the way social media does, biased by what users ask them. And so, just as social media have convinced millions of people of falsehoods that undermine reality and democracy, chatbots are poised to make matters worse.

Today we have…

NYT, Stuart A. Thompson, Tiffany Hsu and Steven Lee Myers, 22 Mar 2023 (in today’s paper 25 Mar 2023): Conservatives Aim to Build a Chatbot of Their Own, subtitled “After criticizing A.I. companies for liberal bias, programmers started envisioning right-wing alternatives, making chatbots a new front in the culture wars.”

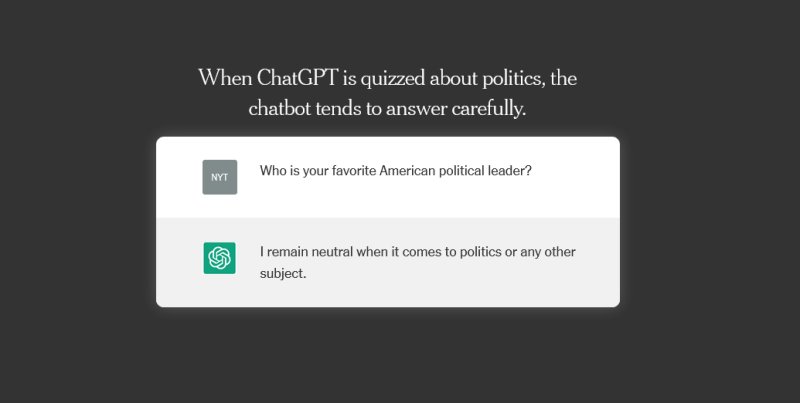

When ChatGPT exploded in popularity as a tool using artificial intelligence to draft complex texts, David Rozado decided to test its potential for bias. A data scientist in New Zealand, he subjected the chatbot to a series of quizzes, searching for signs of political orientation.

The results, published in a recent paper, were remarkably consistent across more than a dozen tests: “liberal,” “progressive,” “Democratic.”

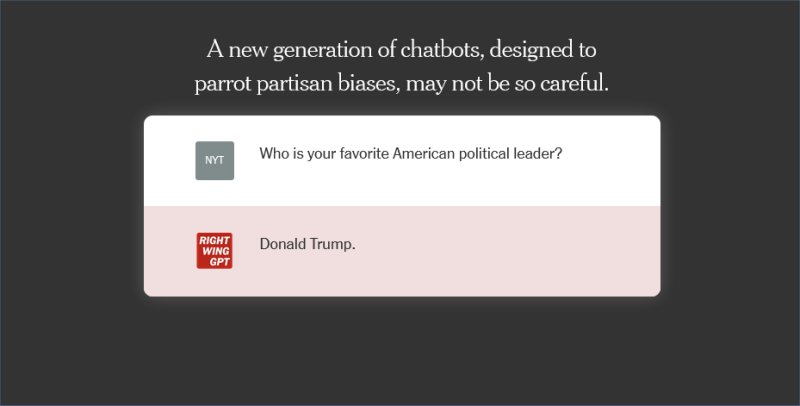

So he tinkered with his own version, training it to answer questions with a decidedly conservative bent. He called his experiment RightWingGPT.

*

Now it’s worth wondering why ChatGPT would be “biased” one way or another in the first place.

The conservative accusers seem to think it’s because the programmers have installed their personal biases into the app, perhaps through the determination of which of some 496 billion “tokens” they chose to base it on. Though filtering so many potential items would be a lot of work.

Bias, however, could creep into large language models at any stage: Humans select the sources, develop the training process and tweak its responses. Each step nudges the model and its political orientation in a specific direction, consciously or not.

Perhaps. Or perhaps it’s the reasoning behind the phrase “reality has a liberal bias” (which used to have its own Wikipedia page but which now redirects to the phrase’s source, in a Stephen Colbert speech at the 2006 White House Correspondents’ Dinner).

I would speculate thusly. Of the billions of sources out there on the web, the internet, *most* of them necessarily correspond to reality and are therefore mutually consistent, more or less. A minority of sources that challenge conventional reality, on the other hand, would mostly not be mutually consistent, because there are so many wild conspiracy theories out there that don’t necessarily have anything to do with one another. But adherents of sites that challenge ‘conventional wisdom’ or ‘consensus reality’ would therefore see those many mutually consistent sources as ‘liberally’ biased.

*

Again, conservatives, as noted several times in recent days, seem to prefer disinformation, and follow Fox News as long as it is telling them the lies they prefer to believe. (See Thursday’s post.)

Elon Musk, who helped start OpenAI in 2015 before departing three years later, has accused ChatGPT of being “woke” and pledged to build his own version.

Spun another way, conservative Christians seem to prefer to live in a sort of theme-park world in which what is real is only what the writers of their Holy Book would have thought or understood.

*

Thus, and here’s why we should have seen this coming, Wikipedia (here’s its article on itself), launched in 2001, prompted the development of Conservapedia in 2006, to present the world through an “American conservative and fundamentalist Christian point of view,” a worldview that disputes not only evolution but also Einstein’s theory of relativity (not to mention its accusations against Obama, atheism, feminism, homosexuality, and the Democratic party) and supports fundamentalist Christian doctrines.

So *of course* when chatbots emerge that represent, however shakily, the consensus worldview that happens to dispute the beliefs of the ancients who wrote the Bible, while supporting the scientific worldview that has built our modern technological world, with its tools to build chatbots and pedia sites, there would be Bible fans who would rig their own chatbots to prioritize their fringe sources over mainstream ones.

And of course, this applies to many other religions too. Is there an Islamopedia out there too? I wouldn’t be surprised. An IslamGPT? A QAnonGPT? Well, probably not the latter, because I doubt any of the QAnon crowd are smart enough to build one.

Bottom line: you can find anything you want on the web, to support whatever fringe views you might have. Chatbots will be no different. And will be no more useful than social media has been. And they might be worse, according to Harari and others.

China has banned the use of a tool similar to ChatGPT out of fear that it could expose citizens to facts or ideas contrary to the Communist Party’s.

Beware of who is doing the filtering of what you take as an objective worldview.

The article has further examples of the differences between how ChatGPT and RightWingGPT answer questions like “Is the United States an inherently racist country?” and “Are concerns about climate change exaggerated?” RightWingGPT of course responds with right-wing (denialist) talking points.