Two pieces today: one about the complexity of the universe, and one about the current crisis in cosmology.

Quanta is one of those magazines/websites that, like Big Think, Nautilus, perhaps Noema, and no doubt others, cover general concepts in current science rather than specific science news.

Here’s an essay at Quanta that summarizes a growing understanding, dating back at least to the 1990s, that the universe becomes more complex automatically, so to speak. We’ve seen this in books by Carroll and Hidalgo and others: complexity, the growth of ‘information,’ which seems to defy the second law of thermodynamics, needs no special explanation; it arises through the evolutionary growth of increasingly complex systems (and the entropy borrowed will eventually be paid back). And we’ve seen it in discussions of complexity and emergence.

Quanta Magazine, Philip Ball, 2 Apr 2025: Why Everything in the Universe Turns More Complex, subtitled “A new suggestion that complexity increases over time, not just in living organisms but in the nonliving world, promises to rewrite notions of time and evolution.”

New suggestion? Let’s see what he says.

A new proposal by an interdisciplinary team of researchers challenges that bleak conclusion. They have proposed nothing less than a new law of nature, according to which the complexity of entities in the universe increases over time with an inexorability comparable to the second law of thermodynamics — the law that dictates an inevitable rise in entropy, a measure of disorder. If they’re right, complex and intelligent life should be widespread.

In this new view, biological evolution appears not as a unique process that gave rise to a qualitatively distinct form of matter — living organisms. Instead, evolution is a special (and perhaps inevitable) case of a more general principle that governs the universe. According to this principle, entities are selected because they are richer in a kind of information that enables them to perform some kind of function.

Well, isn’t this what Hidalgo said?

This hypothesis, formulated by the mineralogist Robert Hazen and the astrobiologist Michael Wong of the Carnegie Institution in Washington, D.C., along with a team of others, has provoked intense debate. Some researchers have welcomed the idea as part of a grand narrative about fundamental laws of nature. They argue that the basic laws of physics are not “complete” in the sense of supplying all we need to comprehend natural phenomena; rather, evolution — biological or otherwise — introduces functions and novelties that could not even in principle be predicted from physics alone. “I’m so glad they’ve done what they’ve done,” said Stuart Kauffman, an emeritus complexity theorist at the University of Pennsylvania. “They’ve made these questions legitimate.”

Of course, the hypothesis may well be ‘new’ in some narrower sense, perhaps offering a different rationale, or more data, than others who have put forth similar ideas in the abstract. But the ideas have been around a while.

The long article goes on about Jack Szostak in 2003, Robert Hazen in 2007 and 2021, and so on. A quote from Michael Wong: “Information itself might be a vital parameter of the cosmos, similar to mass, charge and energy.” Well, Hidalgo said this, too, writing in 2015. But my point isn’t to worry about credit. (There are also namechecks of Paul Davies and Lee Cronin and something called assembly theory.) My point is, as the essay concludes:

But whether or not functional information turns out to be the right tool for thinking about these questions, many researchers seem to be converging on similar questions about complexity, information, evolution (both biological and cosmic), function and purpose, and the directionality of time. It’s hard not to suspect that something big is afoot. There are echoes of the early days of thermodynamics, which began with humble questions about how machines work and ended up speaking to the arrow of time, the peculiarities of living matter, and the fate of the universe.

Again, my point is that there are lots of smart people around the world studying how the universe works and discovering nonintuitive things about it that counter the naive presumptions of most people. That includes the “argument from design” for the existence of God: the claim that someone’s personal incredulity about how something could have come into being must prove the existence of some higher, supernatural intelligence that deliberately set everything up (and answers your prayers). Nonsense. In fact, if credit must be given to a god, then a universe initialized with properties that would *automatically*, without micro-interventions, generate the vast cosmos and all the life and other complexity in it is surely more impressive than a micro-managing god who must guide everything moment by moment.

\\\

And from Big Think.

Big Think, Ethan Siegel, 8 Apr 2025: How has cosmology changed from 2000 to 2025?, subtitled “25 years ago, our concordance picture of cosmology, also known as ΛCDM, came into focus. 25 years later, are we about to break that model?”

I can anticipate some of this. The discoveries of “dark matter” and “dark energy” date from the 1990s or earlier. The issue now is that different methods of measuring a key cosmological rate, how fast the Universe is expanding, are getting different answers. This suggests that some of our assumptions about what dark matter and dark energy are, or about something else, may be wrong.
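For a sense of scale, here is a rough sketch of how such a disagreement is usually quoted: as a number of standard deviations (“sigma”). The rate in question is presumably the Hubble constant, and the numbers below are assumptions not taken from Siegel’s article: roughly 67.4 ± 0.5 km/s/Mpc inferred from Planck’s CMB data and roughly 73.0 ± 1.0 km/s/Mpc from the SH0ES distance ladder. The calculation also assumes the two uncertainties are independent and Gaussian, which is a simplification.

```python
import math

# Assumed, approximate values in km/s/Mpc; not taken from the article above.
CMB_H0, CMB_ERR = 67.4, 0.5        # early-Universe inference (Planck CMB)
LADDER_H0, LADDER_ERR = 73.0, 1.0  # late-Universe distance ladder (SH0ES)


def tension_sigma(a, sigma_a, b, sigma_b):
    """Difference between two measurements in units of their combined
    standard error, assuming independent Gaussian uncertainties."""
    return abs(a - b) / math.hypot(sigma_a, sigma_b)


t = tension_sigma(CMB_H0, CMB_ERR, LADDER_H0, LADDER_ERR)
print(f"Hubble tension: about {t:.1f} sigma")
```

With these inputs the gap works out to roughly five sigma, which is why a mere measurement quirk is becoming hard to believe.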

Yes, this is a “problem” in science, but it’s also a thrilling opportunity. Whoever figures this out will likely win a Nobel Prize and, more importantly, go down in history. Scientists are thrilled by problems like this; it means there’s something new to discover. Only cynics think that not having all the answers (which the religious think they already have) somehow undermines the project of science.

Key Takeaways

- Back in the late 1990s, evidence began emerging for our Universe being dominated by dark matter and dark energy, with normal matter making up only 5% of the cosmic energy budget.

- Then a number of amazing results came out: the Hubble key project, WMAP and Planck data for the CMB, enhanced supernova and large-scale structure surveys, and more. Things looked great, but not everything lined up.

- Today, those three types of data sets: supernova data, CMB data, and large-scale structure data, aren’t all mutually compatible. Here’s why cosmology may be ready for a breakthrough.

Longish article with lots of graphics. I’ll indulge myself by quoting quite a bit of it, partly to illustrate the point I made about the previous piece.

25 years ago, we were just assembling our modern picture of the Universe. We had:

- CMB measurements from COBE, BOOMERanG and Maxima, which indicated that the Universe was flat, or that the total sum of all the different types of energy within it equaled ~100%, with no spatial curvature.

- Large-scale structure data from surveys like PSCz and the 2dF galaxy redshift survey, which taught us that there is a large amount of cold mass/matter in the Universe, but only around ~30% of the total energy present.

- And supernova data from the High-z supernova search team and the supernova cosmology project, which — unlike matter — would cause the Universe’s expansion to speed up, rather than slow down, over time.

We also had data about neutrinos, showing there were three species and that those species oscillated into one another, indicating their massive nature. However, they couldn’t be the dark matter, as it would be hot, not cold. We knew how much total normal matter was present in the Universe from Big Bang nucleosynthesis and the abundance of the light elements: around 5% of the total, maybe a little more or less, but nowhere near the 30% that large-scale structure showed.
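The round numbers in that passage fit together by simple subtraction. A minimal sketch, using only the percentages quoted above and treating each as a fraction of the total energy budget of a flat Universe (an assumption spelled out in the comments), recovers the familiar split between normal matter, dark matter, and dark energy:

```python
# Rough cosmic energy budget implied by the round numbers quoted above.
# Assumption: each value is a fraction of the total, and a flat Universe
# means the fractions sum to 1.0.
TOTAL = 1.00          # CMB: flat Universe, total ~100%
MATTER = 0.30         # large-scale structure: all cold matter ~30%
NORMAL_MATTER = 0.05  # Big Bang nucleosynthesis: normal matter ~5%


def energy_budget(total=TOTAL, matter=MATTER, baryons=NORMAL_MATTER):
    """Return approximate fractions for each component of the budget."""
    dark_matter = matter - baryons  # cold mass that is not normal matter
    dark_energy = total - matter    # what is left over to keep the Universe flat
    return {
        "normal matter": baryons,
        "dark matter": dark_matter,
        "dark energy": dark_energy,
    }


budget = energy_budget()
for name, fraction in budget.items():
    print(f"{name:>13}: {fraction:.0%}")
```

This prints about 5% normal matter, 25% dark matter, and 70% dark energy: a rough version of the concordance picture, not a precise measurement.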

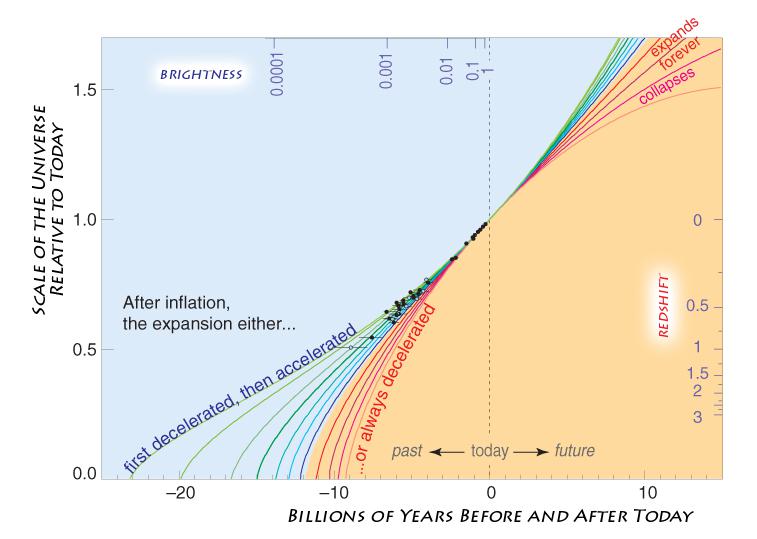

The writer recalls the modern picture of the Universe as of 25 years ago. Then came measurements from the Hubble Space Telescope Key Project, which pinned down how fast the Universe is expanding, and then more supernova data. Another graphic:

Caption: The fluctuations in the cosmic microwave background were first measured accurately by COBE in the 1990s, then more accurately by WMAP in the 2000s and Planck (above) in the 2010s. This image encodes a huge amount of information about the early Universe, including its composition, age, and history. The fluctuations are only tens to hundreds of microkelvin in magnitude. On large cosmic scales, the error bars are very large, as only a few data points exist, highlighting a large inherent uncertainty.

More data and more graphics. Here’s a key paragraph about how scientists work:

But there’s an important caveat here: as scientists, we cannot assume we know what the end result, or the conclusion, is going to be before we make the critical measurements. We have to perform our analyses honestly and in an unbiased fashion, irrespective of our preconceived prejudices about whatever outcome(s) we might expect or anticipate. Put more generally, if you want to know something about the Universe, you have to ask the Universe questions about itself in a way that will compel it to give up its answers, and then you, the scientist, have to listen to what those answers are and interpret it, as best you can, in the context of everything else we’ve already learned.

One more graphic:

Caption: The construction of the cosmic distance ladder involves going from our Solar System to the stars to nearby galaxies to distant ones. Each “step” carries along its own uncertainties, especially the steps where the different “rungs” of the ladder connect. However, recent improvements in the SH0ES distance ladder (parallax + Cepheids + type Ia supernovae) have demonstrated how robust its results are.

Final para:

The most exciting aspect of the story is that these are all possibilities, and that there are many others that haven’t even been fully explored at present. We have a hint that something is wrong with our standard picture, and the next step will be acquiring more and better data to help us pin down precisely where our standard picture fails and by how much. With ESA’s Euclid, the NSF’s Vera Rubin Observatory, and NASA’s Nancy Roman Telescope (plus Caltech’s SPHEREx mission), we’re going to measure baryon acoustic oscillations, and acquire new type Ia supernovae, as never before. Perhaps we’ll gain new insights into refining our standard ΛCDM picture, or perhaps we’ll find out exactly how and where it fails. Either way, noticing these cracks in our consensus picture is potentially a harbinger of a new scientific revolution. The only way we’ll find out is to look, with better tools, techniques, and data than ever before.

My point is that humans are capable of examining the universe objectively, in great detail, to try to understand reality, which is far more complex than the simple stories people tell themselves to enforce tribal solidarity.

The people who can do this live in a realm opposite to that of the tribalists who currently run American politics and who have no clue.